The neural network

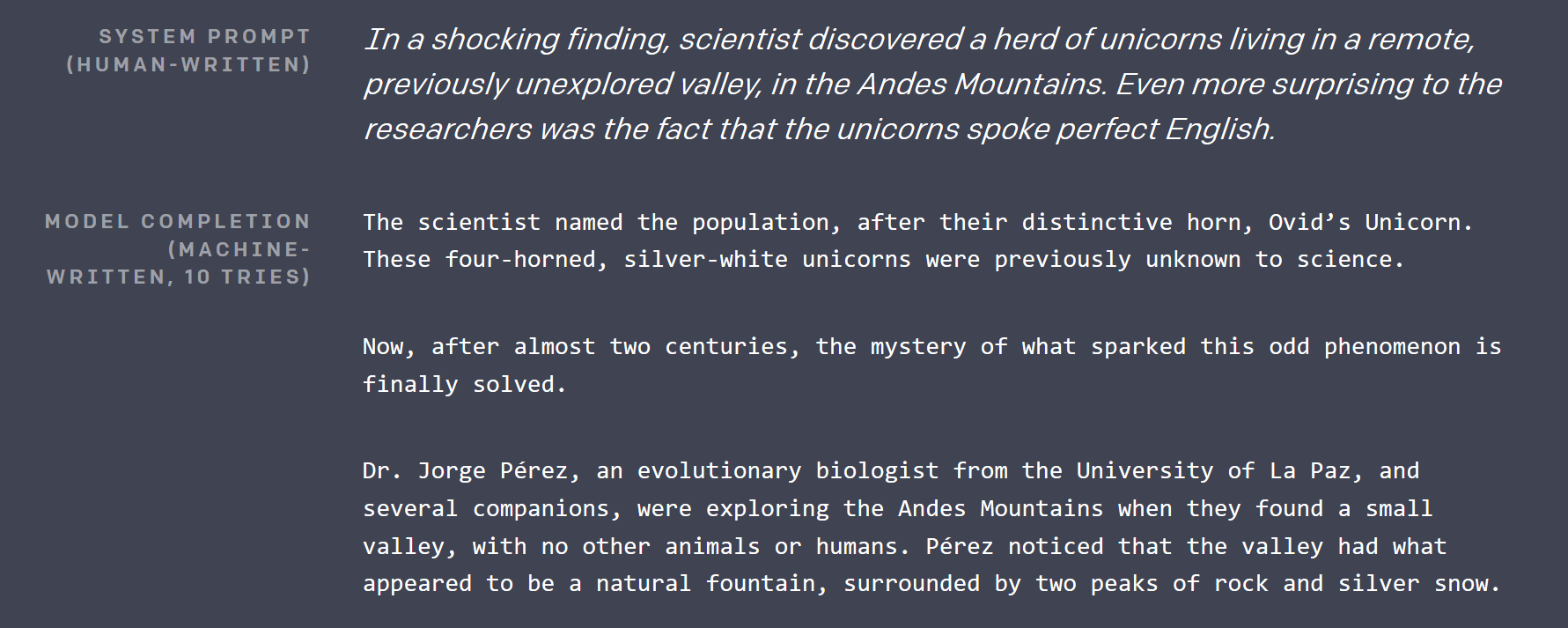

About 2.5 months ago OpenAI published a blog post where they demonstrated the nearly impossible: a deep learning model that can write articles indistinguishable from ones written by humans. The text it generated was so impressive that I had to check the calendar to make sure it was not an April Fool’s joke (mind you, that was February, and Seattle was covered in snow).

As of today, they have not released the largest neural network they built, with over 1 billion parameters (a very controversial decision), but they open-sourced a smaller 117M-parameter version on GitHub under the MIT license. The model has a very unmemorable name: GPT-2.

So, about a month ago, when I was trying to think of a cool project I could make with TensorFlow, that network became the starting point. If it could already generate English text, it should not be too hard to fine-tune it to generate song lyrics, given a sufficiently large dataset.

How does GPT-2 work?

There are several important achievements in deep learning research that made GPT-2 possible:

Self-supervised learning

This technique got its name finalized by Yann LeCun only several days after I wrote the first version of this article. It is a very powerful technique that can be applied to basically any kind of real-world data. To train GPT-2, OpenAI collected tens of gigabytes of articles from various sources that were upvoted on Reddit.

Conventionally, one would need a human to go through all these articles and, for example, mark them as “positive” or “negative”. Then one would teach a neural network in a supervised manner to classify these articles the same way the human did.

The new idea here is that to create a deep learning model with a high-level understanding of your data, you simply corrupt the data and task the model with restoring the original. This makes the model learn the connections between pieces of data and their surrounding context.

Let’s take text as an example. BERT, a related model, takes a sample of the original text, picks 15% of tokens to be corrupted, then masks 80% of them (i.e. replaces them with a special mask token, shown here as ___), replaces 10% with some other random token from the dictionary, and keeps the remaining 10% intact. Take I threw a ball, and it fell to the grass. After corruption it might look like this: I threw car ball, and it ___ to the grass. In layman’s terms, to restore the original the network needs to learn that something thrown will likely fall, and that car ball is something very uncommon in that context. GPT-2 uses an even simpler form of corruption: it hides everything after a given position and has to predict the next token from the text before it.
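The corruption procedure described above (select roughly 15% of tokens, then mask 80% of those, randomize 10%, and keep 10%) can be sketched in a few lines. This is a minimal illustration in Python rather than the project’s C#, not OpenAI’s code: the function name `corrupt`, the toy vocabulary, and the per-token coin flip (which selects 15% only on average) are all assumptions made for the example.

```python
import random

MASK = "___"  # placeholder mask token, as in the example sentence above


def corrupt(tokens, vocab, rng, select_prob=0.15):
    """Return a corrupted copy of tokens plus the positions the model must restore."""
    corrupted = list(tokens)
    targets = []
    for i in range(len(tokens)):
        if rng.random() >= select_prob:
            continue  # token not selected: left untouched, not a target
        targets.append(i)
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80% of selected tokens: mask
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: some random token
        # remaining 10%: keep the original token in place
    return corrupted, targets


sentence = "I threw a ball , and it fell to the grass .".split()
vocab = ["car", "dog", "blue", "ran"]  # toy vocabulary, purely illustrative
corrupted, targets = corrupt(sentence, vocab, random.Random(0))
```

During training the model would see `corrupted` and be scored only on how well it restores the tokens at the `targets` positions.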

A model trained like that is good at generating or completing partial data, but the high-level features it learned (the outputs of its inner layers) can also be used for other purposes: add a layer or two on top of them, and fine-tune only those new layers on an actual, smaller, human-labeled dataset in the conventional way.

Sparse self-attention

GPT-2 uses something called self-attention. In essence, it is a technique that lets a neural network processing a large input focus on some parts of it more than others. The network learns where it should “look” during training. The attention mechanism is better explained in this blog post.

The sparse part in the title of this section refers to a restriction on which segments of the input the attention mechanism can choose from. Originally attention could choose from the entire input. That makes its weight matrix O(input_size^2), which grows very quickly with the size of the input. GPT-2 itself only restricts each position to looking at earlier positions; sparse attention narrows the choice further in some way. For more information on that, take a look at another OpenAI blog post.
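To make the quadratic growth concrete, here is a small pure-Python sketch of attention weights, with an optional restriction on where each position may look. It is an illustration only: the function name and the “last `window` positions” rule are assumptions chosen for simplicity, and they are not the specific sparsity pattern from OpenAI’s post.

```python
import math


def attention_weights(queries, keys, window=None):
    """Softmax attention weights; row i says how much position i looks at each j.

    window=None lets every position attend to every position (n * n weights).
    window=w restricts position i to the w most recent positions, itself included.
    """
    n = len(queries)
    scale = math.sqrt(len(queries[0]))
    weights = []
    for i in range(n):
        scores = []
        for j in range(n):
            allowed = window is None or (i - window < j <= i)
            if allowed:
                dot = sum(q * k for q, k in zip(queries[i], keys[j]))
                scores.append(dot / scale)
            else:
                scores.append(-math.inf)  # excluded positions get zero weight
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
dense = attention_weights(vectors, vectors)            # all 4 * 4 weights in use
local = attention_weights(vectors, vectors, window=2)  # at most 2 per row
```

With 4 inputs the dense version uses all 16 weights, while the windowed one uses at most 2 per row, so its cost grows linearly with the input length instead of quadratically.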

The attention in GPT-2 is multi-head. Imagine you could have an additional eye or two you could use to check what was in the last paragraph without stopping reading the current one.

Many more

Residual connections, byte pair encoding, and many more.

Porting GPT-2 (and converting Python in general)

The original model code is in Python, but I am a C# guy. Fortunately, the source code is quite readable, and the crux of it is in just 5 files, maybe 500 lines total. So I created a new .NET Standard project, installed Gradient (a TensorFlow binding for .NET), and converted those files line by line to C#. That took me about 2 hours. The only pythonic thing left in the code was the use of Python regex module from pip (the most commonly used package manager for Python), as I did not want to waste time learning the intricacies of Python regular expressions (as if it was not enough to deal with .NET ones already).

Mostly the conversion consisted of defining similar classes, adding types, and rewriting Python list comprehensions into corresponding LINQ constructs. In addition to LINQ from the standard library, I used MoreLinq, which slightly expands what LINQ can do. For example:

    bs = (list(range(ord("!"), ord("~")+1))
          + list(range(ord("¡"), ord("¬")+1))
          + list(range(ord("®"), ord("ÿ")+1)))

turned into:

    var bs = Range('!', '~' - '!' + 1)
        .Concat(Range('¡', '¬' - '¡' + 1))
        .Concat(Range('®', 'ÿ' - '®' + 1))
        .ToList();

Another thing I had to fight with was a discrepancy between the way Python handles slices and the new ranges and indices feature in the upcoming C# 8, which I discovered while debugging my initial runs. In both languages the end of a range is exclusive, but counting from the end is written differently: Python’s -1 is ^1 in C#, and to include the very last element you have to omit the right side of the .. expression (or write ^0).
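For reference, this is how the Python side of that comparison behaves. The snippet is only a reminder of standard Python slicing; the list contents are made up.

```python
tokens = ["a", "b", "c", "d", "e"]

first_three = tokens[0:3]    # indices 0, 1, 2: the end index 3 is excluded
without_last = tokens[1:-1]  # -1 refers to the last element, which is excluded
through_last = tokens[1:]    # omit the end to include the very last element
```

Each of these needs an explicit, checked translation when ported, which is exactly where my off-by-one errors came from.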

There are two hard things in computer science: cache invalidation, naming things, and off-by-one errors.

Unfortunately, the original source drop did not contain any training or even fine-tuning code, but Neil Shepperd provided a simple fine-tuner on his GitHub, which I had to port too. Anyway, the result of that effort is C# code that can be used to play with GPT-2, and it is now a part of the Gradient Samples repository.

The point of the porting exercise is two-fold: after porting, one can play with the model code in one’s favorite C# IDE, and it shows that it is now possible to get state-of-the-art deep learning models working in custom .NET projects shortly after release (just a bit over a month passed between the code drop of GPT-2 and the first release of Billion Songs).

Fine-tuning to song lyrics

There are several ways one could get a large corpus of song lyrics. You could scrape one of the websites hosting them with an HTML parser, or pull them out of your karaoke collection or mp3 files. Fortunately, somebody did it for us. I found quite a few prepared lyrics datasets on Kaggle. “Every song you have heard” seemed to be the largest. Trying to fine-tune GPT-2 on it, I faced two problems.

CSV reading

Yes, you read that correctly: CSV parsing was a problem. Initially, I wanted to use ML.NET, the new Microsoft library for machine learning, to read the file. However, after skimming through the documentation and setting it up, I realized that it failed to process line breaks in the songs properly. No matter what I did, it struggled after a few hundred examples and started mixing pieces of lyrics with titles and artists.

So I had to resort to a lower-level library that I had had a better experience with: CsvHelper. It provides a DataReader-like interface. You can see the code using it here. Essentially, you open a file, configure a CsvReader, and then interleave calls to .Read() with calls to .GetField(fieldName).
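The tricky part of such a file is that one record spans several physical lines whenever the lyrics field contains line breaks. The sketch below shows the same situation using Python’s standard csv module rather than CsvHelper; the column names `artist`, `title` and `lyrics` and the sample rows are assumptions, not the layout of the actual Kaggle dataset.

```python
import csv
import io

# Toy data: quoted fields may contain line breaks and commas.
raw = (
    'artist,title,lyrics\n'
    'Some Band,First Song,"Line one\nLine two\nLine three"\n'
    'Other Band,Second Song,"Only line, with a comma"\n'
)


def read_songs(text):
    """Parse CSV text whose quoted fields may span several physical lines."""
    # newline="" leaves line endings alone so the csv module can handle them.
    reader = csv.DictReader(io.StringIO(text, newline=""))
    return [(row["artist"], row["title"], row["lyrics"]) for row in reader]


songs = read_songs(raw)
```

A reader that splits on line breaks before looking at the quotes would see five records here instead of two, which matches the symptom of lyrics getting mixed with titles and artists.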

Short songs

Most of the songs are short in comparison to an average article in the original dataset used by OpenAI. GPT-2 training is more efficient on large pieces of text, so I had to bundle several songs up into continuous text chunks to feed them to the trainer. OpenAI also seemed to use this technique, so they have a special token, <|endoftext|>, that acts as a separator between complete texts within a chunk and doubles as the start token. I bundled up songs until a certain number of tokens was reached, then returned the whole chunk to include in the training data. The relevant code is here.
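The bundling step can be sketched as follows. This is a simplified Python illustration, not the code from the repository: `bundle_songs`, `toy_encode` and the choice to keep the final partial chunk are assumptions, and the real encoder is the byte pair encoder rather than a word splitter.

```python
END_OF_TEXT = "<|endoftext|>"


def bundle_songs(songs, encode, min_tokens):
    """Yield token chunks of at least min_tokens, built from whole songs."""
    chunk = []
    for lyrics in songs:
        chunk.extend(encode(END_OF_TEXT))  # separator, doubles as start token
        chunk.extend(encode(lyrics))
        if len(chunk) >= min_tokens:
            yield chunk
            chunk = []
    if chunk:
        yield chunk  # leftover songs that did not fill a whole chunk


def toy_encode(text):
    # Stand-in for the real byte pair encoder: one token per word.
    return text.split()


chunks = list(bundle_songs(["la la la", "na na", "hey hey hey hey"], toy_encode, 6))
```

Songs are never split across chunks here, so a chunk can exceed `min_tokens` by up to one song’s length.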

Hardware requirements for tuning

Even the smaller version of GPT-2 is large. With 12GB of GPU RAM I could only set the batch size to 2 (i.e. train on two chunks at once; larger batch sizes improve GPU performance and training results). A batch size of 3 would run out of memory in CUDA. And it took half a day to tune it to the desired performance on my V100. The bonus is that you can see the progress: every now and then the training code outputs some generated samples, which start as plain simple text and look more and more like song lyrics as the training progresses.

I have not tried it, but training on CPU will probably be very slow.

Pre-tuned model

As I was preparing this blog post, I realized it would be better not to force everyone to spend hours on fine-tuning the lyrics model, so I released a pre-tuned one in the Billion Songs repository. If you are just trying to run Billion Songs, you don’t even have to download it manually. The project will do it for you by default.

And I swear you, I swear

You ruined it now, I hope you make it

And I hope your dream, I hope you dream, I hope you dream I hope you dream I hope you dream about

About

what I'm going. I'm going. I'm going. I'm going, I'm going, I'm going, I'm going, I'm going, I'm going, I'm going,

I'm going, I'm going, I'm going…

Making a website

OK! That looks like a song (sort of), now let’s make a web site!

Since I don’t plan to provide any APIs, I chose the Razor Pages template as opposed to MVC. I turned on authorization too, as we will allow users to vote for the best lyrics and have a Top 10 chart.

Rushing the MVP, I went ahead and created a Song.cshtml web page, whose goal for now will be to simply call GPT-2 and get a random song. The layout of the page is trivial, and basically consists of the song and its title:

    @page "/song/{id}"
    @model BillionSongs.Pages.SongModel
    @{
        ViewData["Title"] = @Model.Song.Title ?? "Untitled";
    }
    <article style="text-align: center">
        <h3>@(Model.Song.Title ?? "Untitled")</h3>
        <pre>@Model.Song.Lyrics</pre>
    </article>

Now, because I like my code reusable, I created an interface that will let me plug in different lyrics generators later on. ASP.NET will inject it into SongModel.

    interface ILyricsGenerator {
        Task<string> GenerateLyrics(uint song, CancellationToken cancellation);
    }

Omitting the song title for now, all we need to do is register Gpt2LyricsGenerator in Startup.ConfigureServices and call it from the SongModel. So let’s get started on the generator. And the first thing we need to ensure is that we have

Repeatable lyrics generation

Because I made a bold statement in the title that there are going to be over 1 billion songs, don’t even think about generating and storing all of them. First, even without any metadata, that alone would take over 1TB of disk space. Second, it takes ~3 minutes on my nettop to generate a new song, so it would take forever to generate all of them. And I want to be able to turn that billion into a quintillion by switching to Int64 if needed! Imagine we could make 1 cent per song on 1 quintillion songs. That would be more than the world’s current yearly GDP!
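A quick back-of-the-envelope check of those numbers, written out in Python. The figure of roughly 1 KB of lyrics per song is an assumption (it is what the 1TB estimate implies); the rest follows from the numbers in the text.

```python
SONGS = 10**9
BYTES_PER_SONG = 1_000   # assumption: roughly 1 KB of lyrics per song
MINUTES_PER_SONG = 3     # generation time on the nettop, from the text

storage_tb = SONGS * BYTES_PER_SONG / 10**12                     # terabytes
years_to_generate = SONGS * MINUTES_PER_SONG / (60 * 24 * 365)   # one machine
quintillion_cents_in_dollars = 10**18 // 100                     # 1 cent per song
```

That comes out to about 1 TB of storage, several thousand years of generation time on a single machine, and 10^16 dollars for the quintillion-song fantasy.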

Instead, what we need to do is ensure that GPT-2 generates the same song over and over again given its id, which I specify in the route. For that purpose, TensorFlow lets you set the seed of its internal random number generator at any time via the tf.set_random_seed function, like this: tf.set_random_seed(songNumber). Then I wanted to just call Gpt2Sampler.SampleSequence to get the encoded song text, decode it, and return the result, thus completing Gpt2LyricsGenerator.
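The underlying idea, independent of TensorFlow, is that every random choice is derived from the song id. Here is a toy Python sketch of that principle; the word list and `generate_song` are invented for the example, and it uses Python’s own random module, not tf.set_random_seed.

```python
import random

WORDS = ["love", "night", "dream", "fire", "road", "heart"]  # toy vocabulary


def generate_song(song_id, length=8):
    """Derive every random choice from song_id, so the same id repeats the song."""
    rng = random.Random(song_id)  # private generator: no hidden global state
    return " ".join(rng.choice(WORDS) for _ in range(length))
```

Calling `generate_song(42)` twice returns the same string, so nothing has to be stored: the id in the URL is the song.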

Unfortunately, on the first try that did not work as expected. Every time I hit the refresh button, a new unique text was returned on the page. After quite a bit of debugging, I finally found out that TensorFlow 1.X has significant issues with reproducibility: many operations have internal state that is not affected by set_random_seed and is hard to reach to reset.

Reinitializing the model variables helped offset that problem, but it also meant that the session had to be recreated and the model weights reloaded on every call. Reloading a session of that size caused a giant memory leak. To avoid looking for its cause in the TensorFlow C++ source code, I decided that instead of doing text generation in-process I would spawn a new process with Process.Start, generate the text there, and read it from the standard output. Until a way to reset the model state in TensorFlow is stabilized, this will be the way to go.

So I ended up with two classes. Gpt2LyricsGenerator implements ILyricsGenerator from above by spawning a new instance of BillionSongs.exe with command line parameters that include the song id. That new process eventually instantiates Gpt2TextGenerator, which actually calls GPT-2 to generate the lyrics and simply prints them out.
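The process-per-song pattern looks roughly like this when sketched in Python instead of C#. The child script is a stand-in for the real generator, and `generate_in_child_process` is a hypothetical name; the point is only that each call gets a fresh process with no leftover state, and the parent reads the result from standard output.

```python
import subprocess
import sys

# Stand-in for the real generator: a tiny script that seeds a fresh
# interpreter from its command line argument and prints the "lyrics".
CHILD_SCRIPT = (
    "import random, sys\n"
    "rng = random.Random(int(sys.argv[1]))\n"
    "print(' '.join(rng.choice(['la', 'na', 'hey']) for _ in range(6)))\n"
)


def generate_in_child_process(song_id):
    """Run the generator in a new process and return what it printed."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD_SCRIPT, str(song_id)],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()
```

The price is process startup (and, for the real model, weight loading) on every call; in exchange any leaked memory is returned to the OS when the child exits.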

Now refreshing the page always gave me the same text.

Dealing with 3 minute time to generate a song

What a horrible user experience it would be! You go to a website, click “Make New song”, and absolutely nothing happens for 3 (!) minutes while my nettop takes its time to generate the song lyrics you requested.

I solved this problem on multiple levels:

Pregenerating songs

As mentioned above, you can’t pregenerate all the songs and serve them from a database. And you can’t just generate on demand, because that is too slow. So what can you do?

Simple! Since the primary way for users to see a new song is to click the “Make Random” button, let’s pregenerate a lot of songs in advance, put them into a ConcurrentQueue, and let “Make Random” pop songs from it. While the number of visitors is low, the server will use the time between them to generate some songs, which will then be readily accessible.

Another trick I used is to loop that queue several times, so that many users can see the same pregenerated song. One just needs to keep a balance between the RAM usage and how many times a user has to click on “Make Random” to see something they have seen before. I simply picked 50,000 songs as a reasonable number, which takes just 50MB of RAM while providing quite a large number of clicks to go through.

I implemented that functionality in class PregeneratedSongProvider: IRandomSongProvider (the interface is injected into the code responsible for handling the “Make Random” button).
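The queue-with-reuse idea can be sketched like this. It is a single-threaded Python illustration with invented names, not the PregeneratedSongProvider from the repository, which sits on a thread-safe ConcurrentQueue.

```python
from collections import deque


class PregeneratedSongs:
    """Serve pregenerated songs, reusing each one a limited number of times."""

    def __init__(self, max_servings):
        self.max_servings = max_servings
        self.queue = deque()  # entries are [song, servings_left]

    def add(self, song):
        self.queue.append([song, self.max_servings])

    def next_song(self):
        if not self.queue:
            return None  # nothing pregenerated yet; the caller must fall back
        entry = self.queue.popleft()
        entry[1] -= 1
        if entry[1] > 0:
            self.queue.append(entry)  # loop it back to the end of the queue
        return entry[0]
```

A background task would keep calling `add` while the server is idle, and the “Make Random” handler would call `next_song`.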

Caching

Pregenerated songs are cached in memory, but I also set the HTTP cache header to public to let the browser and the CDN (I use CloudFlare) cache the page, to avoid getting hit by a user influx.

    [ResponseCache(VaryByHeader = "User-Agent", Duration = 3*60*60)]
    public class SongModel: PageModel { … }

Returning popular songs

Most of the songs generated by fine-tuned GPT-2 in that manner are pretty dull, if not rudimentary. To make clicks on “Make Random” more engaging, I added a 25% probability that instead of a completely random song you’ll get a song that was previously upvoted by other users. In addition to increasing engagement, it increases the chance that you will request a song cached either in the CDN or in memory.
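The selection rule itself is tiny. Here is a Python sketch of it; `pick_song` and its parameters are hypothetical names, and falling back to a random song when nothing has been upvoted yet is an assumption.

```python
import random


def pick_song(random_songs, upvoted_songs, rng, upvoted_probability=0.25):
    """Return an upvoted song with the given probability, else a random one."""
    if upvoted_songs and rng.random() < upvoted_probability:
        return rng.choice(upvoted_songs)
    return rng.choice(random_songs)
```

Passing the random generator in as `rng` keeps the rule easy to test with a fixed seed.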

All of the tricks above are wired together using ASP.NET dependency injection in the Startup class.

Voting

There’s not much special about the voting implementation. There is a SongVoteCache that keeps the counts up to date, and an iframe hosting the vote buttons on the song page. The iframe allows the essential part of the page (title and lyrics) to be cached, while the vote counts and login status are loaded later.

The end results

A demo version that ran on my nettop, fronted by CloudFlare (give it some slack, it’s a Core i3), now frozen and moved to the Azure App Service free tier.

The GitHub repository, containing source code, and instructions to run the website and tune the model.

Plans for the future/exercises

Generate titles

GPT-2 is very easy to fine-tune. One could make it generate song titles by prefixing or suffixing every sample of lyrics from the dataset with an artificial token like <|startoftitle|>, followed by the title from the same dataset.
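As a sketch of the suffix variant, a training sample could be assembled like this. The exact layout (newline before the title token, <|endoftext|> at the start) is an assumption for illustration; nothing like this exists in the project yet.

```python
START_OF_TITLE = "<|startoftitle|>"
END_OF_TEXT = "<|endoftext|>"


def make_training_sample(lyrics, title):
    """Put the title after the lyrics so the model learns to write it last."""
    return f"{END_OF_TEXT}{lyrics}\n{START_OF_TITLE}{title}"
```

At generation time one would let the model produce the lyrics, then the title token, and read the title from whatever follows it.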

Alternatively, users could be allowed to suggest and/or vote for titles.

Generate music

Halfway through developing Billion Songs I thought it would be cool to download a bunch of MIDI files (that’s an old-school music format that is much closer to text than mp3s are) and train GPT-2 on them to generate more. Some of those files even have text embedded, so you could get karaoke generation.

I know that music generation this way is very possible, because yesterday OpenAI actually went ahead and published an implementation of that idea on their blog. But, hooray, they did not do the karaoke! I found that it is possible to scrape http://www.midi-karaoke.info for that purpose.

Gradient aka TensorFlow for .NET

Please see our blog for any updates.